I have been training ResNet models on my Windows desktop machine with big GPUs. Now I need to deploy these models on a Jetson Xavier for real-time predictions.

My models have historically been saved as Keras .h5 files, so I need to convert the .h5 model file into the ONNX exchange format.

There are two steps:

1) convert the .h5 to the TensorFlow SavedModel format (a folder containing a saved_model.pb file) – this step is done on the desktop machine

2) convert the SavedModel to the ONNX format – this step is done on the Jetson.

On the PC

# python

from keras.models import load_model

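# you can either load an existing h5 model using whatever you normally use for this

# purpose, or just train a new model and save it using the template below

# note: `models` must be imported from whichever package defines your custom layers;

# if the model only uses built-in Keras layers, drop the custom_objects argument

model = load_model('model.h5', custom_objects=models.custom_objects())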

# save in pb froozen pb format, this format saves in folder

# and you are supplying the folder name

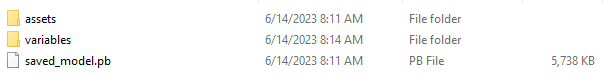

model.save('./outputs/models/saved_model/') # SavedModel formatAfter running this python script, the folder saved_model will contain:
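Before copying the folder to the Jetson, it can be worth confirming that the export actually produced a SavedModel. The sketch below is a minimal check, assuming the usual layout of a saved_model.pb file next to a variables subfolder. The function names are made up for illustration and are not part of Keras or TensorFlow.

```python
from pathlib import Path

# Entries a TensorFlow SavedModel folder is expected to contain.
REQUIRED_ENTRIES = ("saved_model.pb", "variables")


def missing_saved_model_entries(folder):
    """Return the names from REQUIRED_ENTRIES that are absent from `folder`."""
    root = Path(folder)
    return [name for name in REQUIRED_ENTRIES if not (root / name).exists()]


def report(folder):
    """Return a one-line, human-readable summary of the check."""
    missing = missing_saved_model_entries(folder)
    if missing:
        return f"{folder}: missing {', '.join(missing)}"
    return f"{folder}: looks like a SavedModel folder"
```

Calling `print(report('./outputs/models/saved_model/'))` after the export reports any missing entry by name.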

On Jetson

Assuming the TensorFlow 2 runtime (JetPack 5.0) is already installed on the Jetson, along with python3 and pip3, follow the install procedure from the tensorflow-onnx project, but use pip3:

https://github.com/onnx/tensorflow-onnx

pip3 install onnxruntime

pip3 install -U tf2onnx

Then copy the entire saved_model folder from the PC to the Jetson workspace.

From a command line on the Jetson, run the following command:

python3 -m tf2onnx.convert --saved-model path_to/saved_model --output model.onnx
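The same conversion can also be driven from a Python script, which is handy when several models need converting. The sketch below only wraps the command shown above. The helper names are hypothetical, and the optional `--opset` flag is included on the assumption that you may want to pin the ONNX opset to one your inference runtime supports.

```python
import subprocess
import sys


def build_convert_command(saved_model_dir, output_path, opset=None):
    """Build the tf2onnx command line as an argument list."""
    cmd = [
        sys.executable, "-m", "tf2onnx.convert",
        "--saved-model", str(saved_model_dir),
        "--output", str(output_path),
    ]
    if opset is not None:
        # Only pass --opset when the caller asked for a specific version.
        cmd += ["--opset", str(opset)]
    return cmd


def convert(saved_model_dir, output_path, opset=None):
    """Run the conversion; raises CalledProcessError if tf2onnx fails."""
    subprocess.run(build_convert_command(saved_model_dir, output_path, opset), check=True)
```

For example, `convert('path_to/saved_model', 'model.onnx')` runs the same command as above using the current Python interpreter.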

Now, in the directory where the command was run, there will be a new file called model.onnx.
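A quick way to confirm the converted file is usable is to load it with onnxruntime (installed above) and push one dummy input through it. This is a minimal sketch, not a benchmark. It assumes every model input is a float32 tensor and replaces any dynamic dimension, such as the batch size, with 1. The helper names are illustrative.

```python
def concrete_shape(shape, fill=1):
    """Replace dynamic dimensions (None or symbolic names) with `fill`."""
    return [dim if isinstance(dim, int) else fill for dim in shape]


def smoke_test(onnx_path):
    """Load the model with onnxruntime and run one all-zeros input through it."""
    # Imported here so concrete_shape stays usable without these packages.
    import numpy as np
    import onnxruntime as ort

    session = ort.InferenceSession(onnx_path, providers=["CPUExecutionProvider"])
    # Assumption: every input is a float32 tensor, as is typical for image models.
    feeds = {
        inp.name: np.zeros(concrete_shape(inp.shape), dtype=np.float32)
        for inp in session.get_inputs()
    }
    outputs = session.run(None, feeds)
    # Return the output shapes so they can be compared with the Keras model.
    return [out.shape for out in outputs]
```

Running `print(smoke_test('model.onnx'))` should print output shapes that match what the original Keras model produces for a batch of one.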